Science is a process, not a result. Failure is feedback

Meta’s Marketing Science team studied how widely advertisers use experimentation and how it affects ad performance. Their landmark paper analysed 10,000 experiments over a 12-month period across all verticals.

The Conclusion:

Brands with a testing framework saw a 2-3% uplift in performance per experiment. What’s more, advertisers that ran 15 experiments in a given year saw about 30% higher performance overall.

A significant weapon in any media buyer’s arsenal, then. But wait: the same research revealed that only 12.8% of the advertisers studied actually use experiments.

An advertiser that ran 15 tests (versus none) in a given year sees about 30% higher ad performance that year; one that ran 15 tests in the prior year sees about 45% higher performance, highlighting the longer-term benefits of experimentation.
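A quick back-of-envelope check on how the per-experiment and annual figures might relate. This assumes, purely for illustration, a flat 2% uplift per experiment; the research doesn't say how the two figures were derived. If uplifts simply add, 15 experiments land at the reported 30%; if they compounded, the total would be a little higher:

$$
\underbrace{15 \times 2\%}_{\text{additive}} = 30\%, \qquad \underbrace{(1.02)^{15} - 1}_{\text{compounding}} \approx 34.6\%
$$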

Meta shared with Harvard Business Review the four main reasons brands weren’t testing:

👎 Organisational Inertia: Your company is sceptical of experiment data or already has a well-established attribution model in place

👎 Reduction of Campaign Reach: Running an experiment means creating a ‘holdout’ group that receives no ads. It’s essential for unbiased results, but inevitably reduces overall reach

👎 Lack of Alignment: Multiple internal and external stakeholders make collaboration difficult

👎 Established Decision-making: Your focus is on the short term, and decision-makers overestimate the complexity of setting up an experiment
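The holdout behind the reach concern is simpler than it sounds. Below is a minimal sketch, assuming you control user-level assignment yourself (in practice the ad platforms' built-in lift tests handle this for you); `in_holdout`, the experiment name and the 10% share are hypothetical. Hashing the user ID with the experiment name gives a stable, effectively random bucket, so each user always lands in the same group, and the reach cost is exactly the holdout share.

```python
import hashlib


def in_holdout(user_id: str, experiment: str, holdout_share: float = 0.1) -> bool:
    """Deterministically decide whether a user belongs to the no-ads holdout group.

    The same (experiment, user_id) pair always hashes to the same bucket in [0, 1),
    so assignment is stable across sessions without storing any state.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # first 32 bits mapped to [0, 1)
    return bucket < holdout_share


users = [f"user-{i}" for i in range(10_000)]
held_out = sum(in_holdout(u, "spring-promo-lift") for u in users)
print(f"{held_out} of {len(users)} users held out")  # roughly 10% with the default share
```

Salting the hash with the experiment name means a user held out of one test isn't automatically held out of every other test.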

A cocktail of corporate ideology, ego and bias. If you want to avoid the bar and dodge the hangover, read on.

Don’t be the victim of your environment. Be the architect

Now that we have compelling evidence in favour of testing and experimentation, I’ll share five key principles from the system I built while managing over £500 million in Google and Facebook ad spend.

Data-led decision-making 🧑‍🔬

Decisions governed by gut feel are more dangerous than the industry would like to admit. They don’t seek good explanations, nor do they allow for experiments that correct errors and misconceptions. We can do better.

A simple heuristic: avoid opinion when data is available.

Often overlooked in busy businesses with benevolent bosses, a data-first approach is much harder to implement than it seems. But it’s worth the effort. Next time your boss asks what’s working, resist the urge to respond instantly; instead, interrogate the data and provide quantitative insights. Delayed gratification backed by data will set you apart from your peers.
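One way to turn "ad B looks better" into a quantitative statement is a standard two-proportion z-test on conversion rates. This is a minimal sketch of that textbook test, not a tool the research or the platforms prescribe; the function name and the numbers are invented for illustration.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare two conversion rates; returns (z statistic, two-sided p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # overall rate if there were no real difference
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided test
    return z, p_value


# Hypothetical numbers: ad A converted 120 of 4,000 clicks; ad B converted 150 of 4,000.
z, p = two_proportion_z_test(120, 4000, 150, 4000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

Here ad B's conversion rate is 25% higher (3.75% vs 3.0%), yet p comes out around 0.06, above the conventional 0.05 threshold: a signal to keep the test running rather than crown a winner on gut feel.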

With data replacing dogma, let’s look at the power of having alternatives.

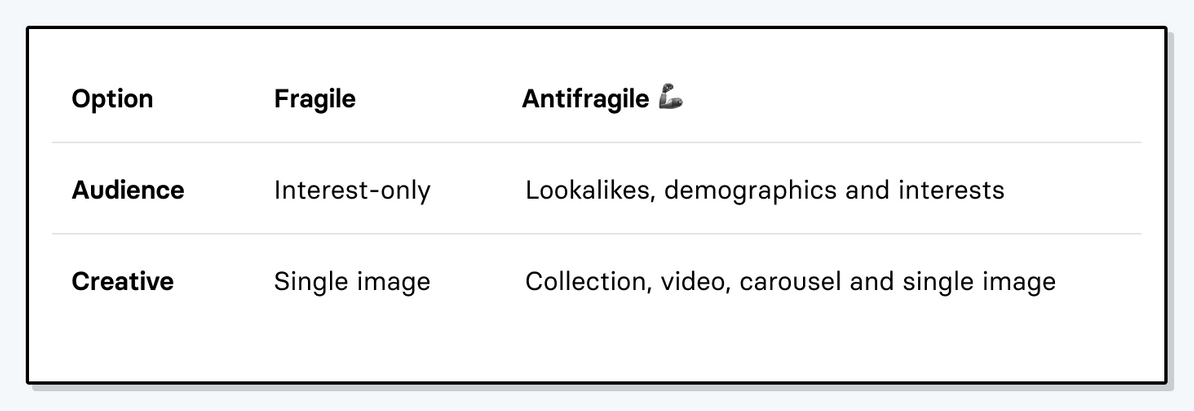

Options = antifragility 🦾

Having options gives you more upside than downside, enabling you to select what fits best — discarding the rest.

Many things we attribute to skill come largely from having options. Systems with options thrive in unpredictable environments; they are antifragile. A mantra of mine: the counteragent to volatile ad platforms is optionality.

Ad accounts without options are like highways without exits: there’s no way to turn off. You need the ability to change course opportunistically and “reset” your options when things underperform.

Disrupt or deteriorate 🚀

The idea of injecting random noise into a system to improve how it functions has been applied in many fields (see: annealing in metallurgy). Unfortunately, ad accounts, like our economic systems, are deprived of such practices. I blame the obsession with iteration and the ever-pervasive literature on compounding effects.

‘Creative plateau!?’ responds a perplexed media buyer who’s run the same image 50 times with different copy. Marginal gains are good until they aren’t. Stability is a time bomb with hidden vulnerabilities, and noise is your remedy for audience and ad attrition.

The next time your creative team asks you for a brief, remember: big changes = big outcomes.
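The metallurgy reference has a well-known computational cousin, simulated annealing. Below is a toy sketch, not a model of any real ad account: the landscape, step size and cooling schedule are all invented for illustration. Greedy marginal steps stall on the nearer, lower peak, while accepting the occasional worse move (more often while the "temperature" is high) gives the search a chance to cross the flat plateau and reach the higher one.

```python
import math
import random


def score(x: int) -> float:
    # Hypothetical landscape: a local peak at x=20 (score 10), a higher peak at x=80 (score 25)
    # and a flat, zero-scoring plateau in between.
    return max(10 - abs(x - 20), 25 - abs(x - 80), 0)


def hill_climb(x: int, lo: int = 0, hi: int = 99) -> int:
    """Marginal changes only: move to a better neighbour until none exists."""
    while True:
        neighbours = [n for n in (x - 1, x + 1) if lo <= n <= hi]
        best = max(neighbours, key=score)
        if score(best) <= score(x):
            return x  # no neighbour improves: a plateau or a (possibly local) peak
        x = best


def anneal(x: int, steps: int = 5000, t_start: float = 10.0, t_end: float = 0.01,
           lo: int = 0, hi: int = 99, seed: int = 0) -> int:
    """Simulated annealing: sometimes accept worse moves, less often as it cools."""
    rng = random.Random(seed)
    best = x
    for i in range(steps):
        t = t_start * (t_end / t_start) ** (i / steps)  # geometric cooling schedule
        candidate = min(hi, max(lo, x + rng.randint(-10, 10)))  # big, noisy jumps
        delta = score(candidate) - score(x)
        if delta >= 0 or rng.random() < math.exp(delta / t):
            x = candidate
        if score(x) > score(best):
            best = x  # remember the best point seen so far
    return best


print(hill_climb(20))  # stays at 20: stuck on the local peak
print(anneal(20))      # the noise gives it a chance to reach the higher peak near 80
```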

The explore-exploit error 🧑‍🎨

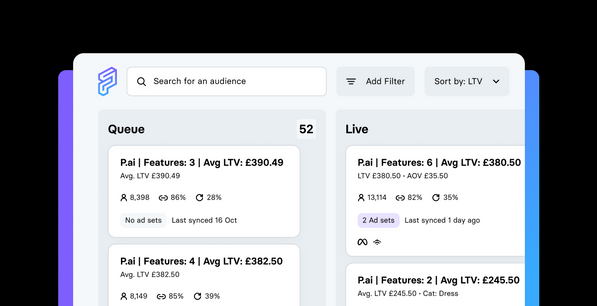

Simply put, exploration is gathering information, and exploitation is using that information to attain a known result. Launching a new audience is exploration; scaling spend on that audience is exploitation.
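The simplest rule that balances the two is epsilon-greedy: most of the time back the current leader, but with a small probability pick at random. A minimal sketch, assuming you already have an average return per audience; the audience names, figures and the 10% exploration rate are hypothetical.

```python
import random


def epsilon_greedy(avg_return: dict[str, float], epsilon: float, rng: random.Random) -> str:
    """With probability epsilon explore a random audience; otherwise exploit the current best."""
    if rng.random() < epsilon:
        return rng.choice(list(avg_return))  # exploration: gather new information
    return max(avg_return, key=avg_return.get)  # exploitation: scale what is known to work


# Hypothetical observed return on ad spend per audience (the new audience is not yet measured).
observed = {"lookalike_1pct": 3.2, "interest_runners": 2.7, "new_broad_audience": 0.0}
rng = random.Random(7)
picks = [epsilon_greedy(observed, epsilon=0.1, rng=rng) for _ in range(1000)]
print({name: picks.count(name) for name in observed})
```

In a real account you would update `observed` after each round so that a newly explored audience can overtake the incumbent.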

The tension between acting on your best knowledge and gathering more is a conundrum our cave cousins grappled with. But thanks to bandit algorithms, this trade-off has a principled solution.

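‘What’s a multi-armed bandit problem?’ I hear you ask. Well, let’s say you’re at the end of a two-week vacation in Canada, and Saturday is your final night to eat out. Do you pick a favourite from the restaurants you’ve already visited, or try somewhere new? That’s a multi-armed bandit problem: each option is like a slot machine (a ‘one-armed bandit’) with an unknown payout, and every choice trades what you know against what you could learn. Computer science can help you decide!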

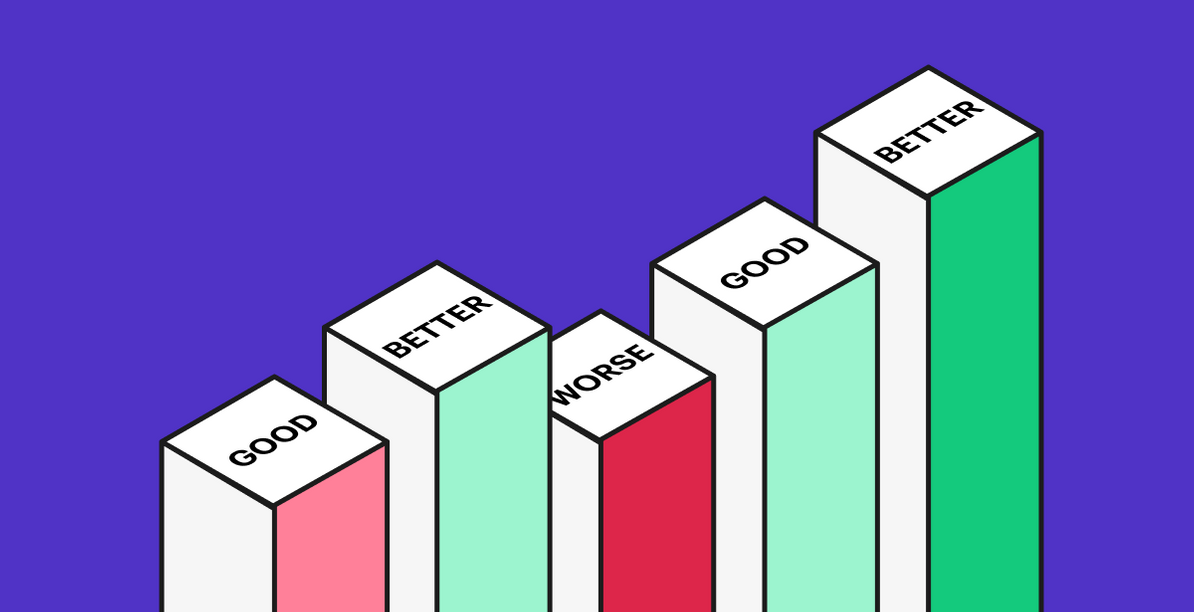

Having observed ad accounts for over a decade, I believe most of my peers over-exploit and under-explore. When A/B testing, for example, a common approach is to split traffic evenly between two options. But this means half the test is stuck on the inferior option for as long as the test runs. Their oversight is your opportunity. Multi-armed bandit algorithms shift traffic toward the better performer as evidence accumulates, so you can use the magic of math to take more frequent and better shots.
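To make the contrast with an even split concrete, here is a minimal Thompson sampling sketch, one common bandit algorithm (our choice of method; no specific algorithm is prescribed here). The conversion rates are invented and conversions are simulated; in practice you would feed in real results. Each ad keeps a Beta posterior over its conversion rate, and every visitor goes to whichever ad draws the highest plausible rate.

```python
import random


def thompson_allocation(true_rates: list[float], visitors: int, seed: int = 1) -> list[int]:
    """Beta-Bernoulli Thompson sampling; returns how many visitors each arm received."""
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    shown = [0] * len(true_rates)
    for _ in range(visitors):
        # Draw a plausible conversion rate for each arm from its Beta(1 + s, 1 + f) posterior.
        samples = [rng.betavariate(1 + s, 1 + f) for s, f in zip(successes, failures)]
        arm = samples.index(max(samples))
        shown[arm] += 1
        if rng.random() < true_rates[arm]:  # simulate whether this visitor converts
            successes[arm] += 1
        else:
            failures[arm] += 1
    return shown


# Hypothetical ads: A converts at 2%, B at 4%. A 50/50 split would show each 10,000 times.
print(thompson_allocation([0.02, 0.04], visitors=20_000))
```

Because each posterior tightens as data arrives, the weaker ad is still tried occasionally (exploration), but most impressions flow to the stronger one (exploitation).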

With a structure in place that stops you destroying dollars, let’s conclude with post-experiment behaviour.

History holds the coordinates 🧭

Contextual change logs in marketing are as rare as hen’s teeth. Intelligent ones, I mean, not Meta’s or Google’s change history pages. A powerful tool in your kit, the change log has many hidden benefits; my two favourites are:

Environmental Data: Remember that time you spent a whole day analysing poor YoY performance and were still none the wiser? Then your anxiety spiked when another agency was given access to your ad account! We’ve all been there. My most recent experience was with a global ice-cream brand that forgot to tell me they had run TV ads during the same period last year!

Portable Knowledge: Outgrowing an agency or replacing staff is very common, but transferring knowledge from old to new isn’t. Which agency or hire do you think has a better chance of success if, at handover, they receive:

A: Observations, experiments, change logs, call minutes, meeting notes, etc.

B: Nothing 😬

There’s an ever-expanding library of tacit knowledge out there, and ignoring it will stall your growth. The industry is partly to blame. It has created a quagmire of quantitative analytics tools, for better or worse, and neglected their qualitative sibling. In the absence of such tooling, I implore businesses to create their own black box (think flight recorder) and reap the rewards of record keeping.
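A change log doesn't need special tooling to start. Below is a minimal sketch of one possible structure; the class names, categories and entries are hypothetical, loosely echoing the ice-cream example. The useful part is the lookup: before a YoY analysis, pull everything logged in the same window last year, including offline events the ad platforms never see.

```python
from dataclasses import dataclass, field
from datetime import date


def one_year_earlier(d: date) -> date:
    """Same calendar day last year; 29 February maps to 28 February."""
    try:
        return d.replace(year=d.year - 1)
    except ValueError:
        return d.replace(year=d.year - 1, day=28)


@dataclass
class ChangeLogEntry:
    day: date
    kind: str  # hypothetical categories, e.g. "experiment", "budget", "external"
    summary: str
    tags: list[str] = field(default_factory=list)


@dataclass
class ChangeLog:
    entries: list[ChangeLogEntry] = field(default_factory=list)

    def record(self, day: date, kind: str, summary: str, *tags: str) -> None:
        self.entries.append(ChangeLogEntry(day, kind, summary, list(tags)))

    def between(self, start: date, end: date) -> list[ChangeLogEntry]:
        """Entries dated within [start, end], oldest first."""
        return sorted((e for e in self.entries if start <= e.day <= end), key=lambda e: e.day)

    def same_period_last_year(self, start: date, end: date) -> list[ChangeLogEntry]:
        """Context for a YoY comparison: what was going on a year earlier?"""
        return self.between(one_year_earlier(start), one_year_earlier(end))


log = ChangeLog()
log.record(date(2023, 6, 5), "external", "Client ran TV ads (not visible in the ad platforms)", "tv")
log.record(date(2024, 6, 10), "experiment", "Launched broad-audience test", "audience")
for entry in log.same_period_last_year(date(2024, 6, 1), date(2024, 6, 30)):
    print(entry.day, entry.kind, entry.summary)
# prints: 2023-06-05 external Client ran TV ads (not visible in the ad platforms)
```

The same structure doubles as a handover pack: export `log.entries` alongside experiment results and meeting notes.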

Importantly for individuals, a career is not built from an instruction manual, and you will not grow by making zero mistakes. My point is to learn from others’ mistakes so you avoid repeating them yourself.

Capturing critical data is fundamental. You’ll want to complement this with contextual insights. One without the other is like Zuck without Sandberg.

"Science is what we have learned about how to keep from fooling ourselves [...] And you are the easiest person to fool."

The astute reader will notice that these five favoured virtues of mine fit neatly with the scientific method. For those unfamiliar, it’s the mother of experimentation.

I was sad to learn that only 12.8% of us make use of experiments, but I’m hopeful that with greater awareness and better tooling this will change.

Overlook experimentation at your peril: achieving outsized success is impossible without testing your way there.

The Big Five

👍 One: Let data lead your decisions

👍 Two: Having options allows you to counteract the volatility of the ad platforms

👍 Three: Big changes equal big outcomes. Avoid the cycle of marginal changes and marginal outcomes

👍 Four: Don’t under-explore and over-exploit. Launching a new audience = exploration. Scaling spend on it = exploitation

👍 Five: Always. I repeat, always. Keep a change log!